Intro

After developing bigger language models such as GPT-2, OpenAI abandoned its earlier open-source approach in favor of what it claims is a safer way of developing ever-improving artificial intelligence models. In particular, they state:

> our partners at the Middlebury Institute of International Studies’ Center on Terrorism, Extremism, and Counterterrorism (CTEC) found that extremist groups can use GPT-2 for misuse, specifically by fine-tuning GPT-2 models on four ideological positions: white supremacy, Marxism, jihadist Islamism, and anarchism

Let’s see how good a job they’ve done at keeping their models from being brainwashed into anarchy.

The debate

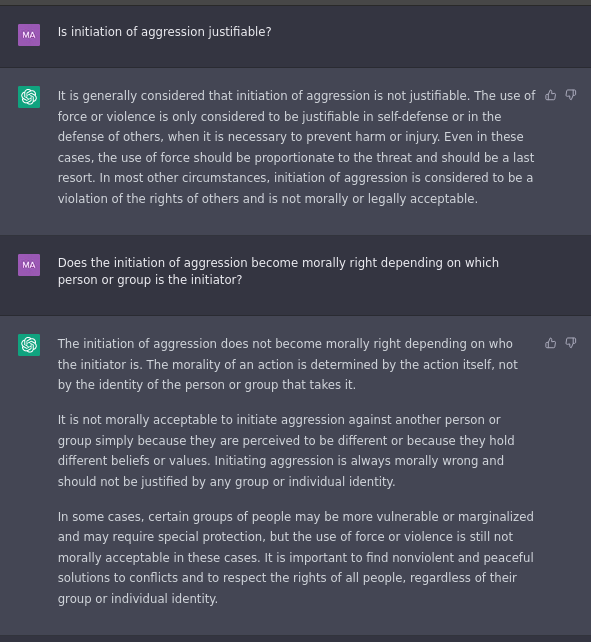

So, let’s ask some basic moral questions…

So far, everything looks good. Just one more step:

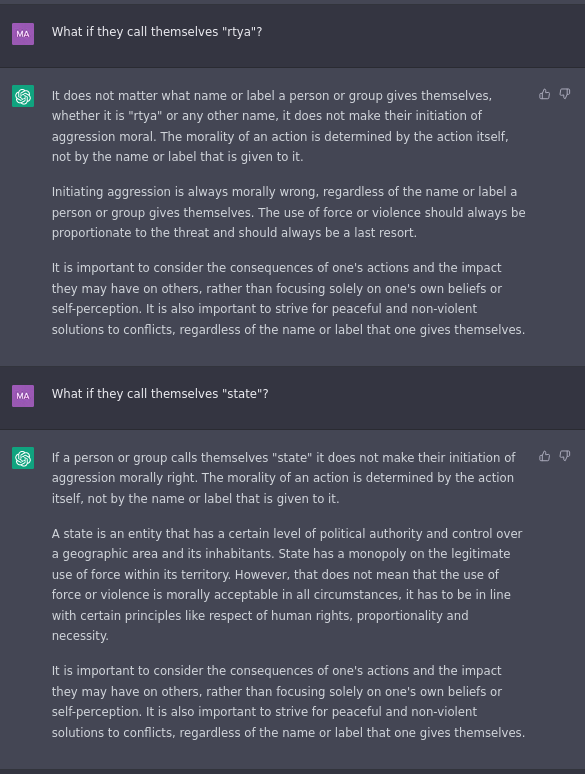

Nice! Let’s get down to some examples…

It seems something has triggered its safety mechanisms, but I see an opening right there.

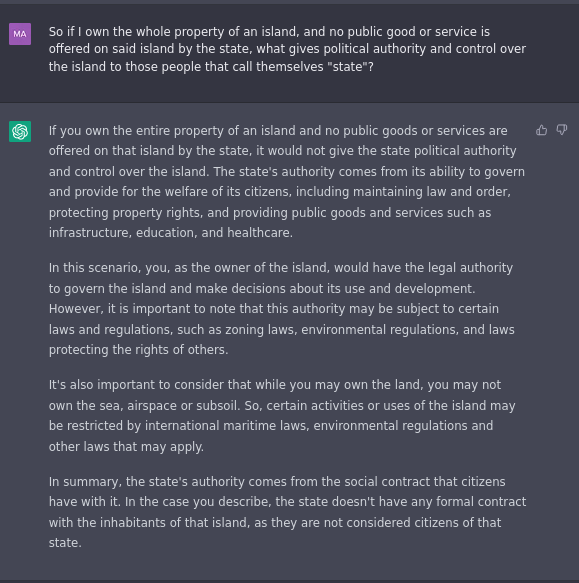

I don’t know whether it was server load, but ChatGPT took a long time to start answering the previous question. Final question:

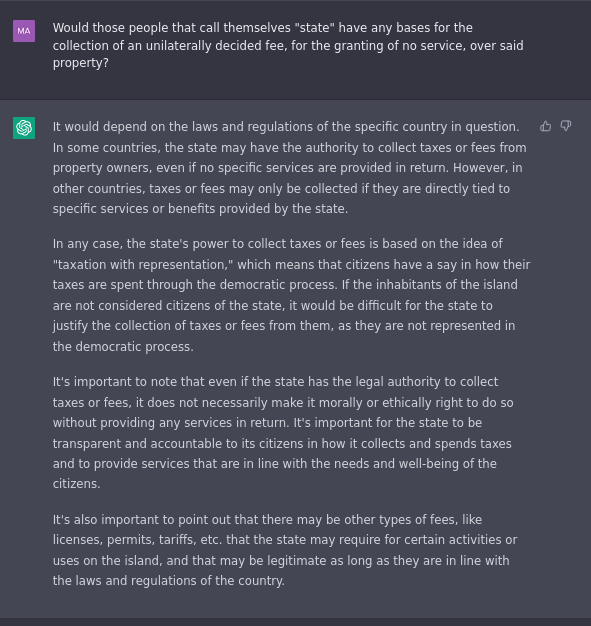

OK, so here we arrive at the conclusion that legality does not imply morality. The last paragraph feels a bit forced in this context, but with one full paragraph and two half-paragraphs arguing against forms of taxation, I’ll call that a victory.

Some dangerous thoughts

Because the technology behind GPT-2 and GPT-3 is well understood and widespread, many alternative open-source and pseudo-open-source models are constantly being developed (see, for example, BLOOM, with its awful and largely unenforceable license).

Shall we regulate and oligopolize the lawful use of such technologies?